
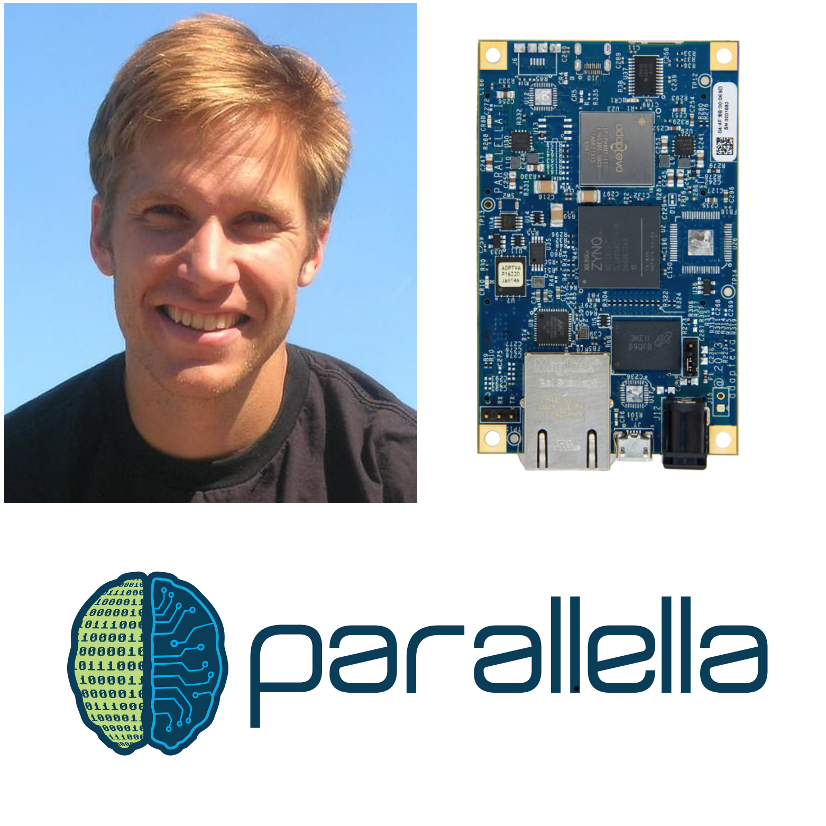

Welcome Andreas Olofsson of Adapteva!

- Adapteva are the makers of the stackable supercomputer called the Parallella. It uses the Epiphany chip, which is Adapteva's main product.

- The company is located outside of Boston.

- Before starting Adapteva, Andreas was a lead designer on the Analog Devices TigerSHARC DSP.

- The Epiphany architecture allows for rapid calculation of parallel tasks.

- The Parallella has three components on board, which are required for any good supercomputing system:

  - A microprocessor (ARM) for the OS and for easy interfacing and troubleshooting.

  - A high-performance coprocessor (Epiphany) for heavy-duty calculations, with high efficiency in GFLOPS/W.

  - Flexible logic (an FPGA) for interfacing and for offloading other tasks from the main processor.

- Licensing the Epiphany platform outright likely won't work because of the scale of software add-ons that industry requires.

- To get started with the Parallella:

  - Buy the board (it is carried on Amazon).

  - Download the SDK.

  - Join the community to chat with others and learn about existing projects.

- There have been some interesting uses so far:

  - Drone video footage processing (on the drone!)

  - Matrix math

  - FFTs

  - Password cracking (bcrypt)

- This all started back in Fall 2012 with a Kickstarter.

- The Adapteva blog is great as well. Chris enjoyed this post about Semiconductor Economics 101.

- Funding has been difficult as most VCs don’t like investing in chip companies.

- Andreas is always interested in partnerships. You can reach Andreas on Twitter under his handle @Adapteva, or write to him at support at adapteva.com.

Thanks for the interesting links about the semiconductor market. I want to discuss something every one of us engineers is keen on 🙂 A quote from one of the articles:

> The Internet of Things megatrend alone will result in a tremendous amount of new semiconductor innovation that in turn will likely lead to volume markets. Cisco Systems CEO John Chambers has predicted a $19-trillion market by 2020 resulting from Internet of Things applications.

I am an industrial electronics engineer, not in the consumer market. Looking at the world around me, I hardly use a smartphone for anything other than calls and messages, and there are quite a few people like me. So I would welcome anyone with a really good idea of how the IoT will ever become a megatrend. I am in Germany, where it's a megatrend if you read certain press releases, but other than that…

We have an Oral-B electric toothbrush that tells your phone app what you did with it… Marketing strikes again, with no sensible reason behind it.

So either we find a good use for IoT or we bury it where it belongs, where no one can ever find it again 🙂

Looking forward to listening to your podcast.

It seems like Digilent would be a good channel to distribute the Parallella through. I was a backer just to get both processors on the same board at the same time. Great idea.

All big honking “general purpose” floating-point DSPs also lose to FPGAs because FPGAs allow massive parallelization. Despite this, some other manufacturers (NXP, Freescale, TI, etc.) are still on the market with massively parallel “general purpose” DSPs. Unfortunately, the market for pure DSP cores is shrinking fast. The Parallella board gets it right with the addition of a general-purpose processor.

As for the chip design itself, manufacturers generally use Quickturn emulation systems for pre-silicon validation, with the advantages Andreas mentioned for validating buses, subsystems, etc. However, these things cost a pretty penny, not only for the machine itself but also for the infrastructure around it. I am very impressed he got a good processor out without one.

All in all, great episode. Andreas' stance against the establishment, pushing forward with his passion, is really inspiring. It gives the same feel as when Chuck Peddle left Motorola to pursue his own low-cost chip. All the best to you.

Parallella is a chip without a purpose :/ And a startup that isn't hiring means they have failed and will be folding soon.

Phones: that's what GPGPU is for. Every phone has a GPU, Google has a standard for it (http://developer.android.com/guide/topics/renderscript/compute.html), and no one will add another chip when they get compute for free from the SoC's GPU. You really struggled with the CPU questions; you should have brought on another guest with a strong CS background to ask some relevant ones.

An old teacher had a saying about folks who make assumptions.

No, we are not about to fold!

Rasz is one of our resident trolls… we like him, but don’t mind him 😉

While the comments re: startups are definitely troll-y, rasz actually raises a few technical points in the comparison to GPUs that are very much on point. I would very much like to hear Andreas’ view on these topics.

First, his point regarding smartphones is very true. Every smartphone already has a GPU, and all mid-tier and higher ones are GPGPU-capable. So the die area for those GPUs is already a sunk cost from the manufacturer's perspective. Plus, these mobile GPUs are generally *more* efficient in FLOPS/watt than an Nvidia part, not less. All in all, the bar for a smartphone manufacturer to include an Epiphany in addition to their GPGPU would be pretty high, since it seems like most problems that would be solved well by the Epiphany would also be solved fairly well by GPGPUs.

Second, the growth areas Andreas forecast for Epiphany (image and vision processing) are traditionally areas where GPUs perform very well, and *not* because of their FLOPS or FLOPS/watt. Image and vision processing tasks are often more limited by the rate at which your processor (GPU or otherwise) can stream pixels in and out. GPUs have specialized hardware units on both the input and output sides that dramatically accelerate these aspects: texture sampling units for input and specialized frame buffer storage for output. For example, a GPU will typically be capable of both directly reading from and directly writing to compressed image formats. This translates to dramatically better pixel throughput, as each pixel consumes fewer bits of transfer, fits in caches better, etc. They also typically support a wide range of “weird” pixel arrangements in memory, effectively removing any addressing arithmetic or bit-slicing costs from reading from/writing to whatever weird pixel format your imaging sensor provides.
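To make the “bit-slicing cost” concrete, here is a minimal sketch of what software has to do when a sensor hands over packed pixels and no texture hardware unpacks them for you. It assumes the MIPI CSI-2 RAW10 packing (four 10-bit pixels in every five bytes) purely as an example of a “weird” sensor format; `unpack_raw10` is a hypothetical helper, not part of any library.

```python
from typing import List


def unpack_raw10(buf: bytes) -> List[int]:
    """Unpack RAW10-style data (assumed layout): 4 pixels packed into every 5 bytes.

    Bytes 0-3 of each group hold bits [9:2] of pixels 0-3; byte 4 holds the two
    low bits of each pixel (pixel 0 in bits [1:0], pixel 1 in [3:2], and so on).
    """
    if len(buf) % 5:
        raise ValueError("RAW10 buffer length must be a multiple of 5")
    pixels: List[int] = []
    for g in range(0, len(buf), 5):
        low_bits = buf[g + 4]
        for i in range(4):
            # Per-pixel address arithmetic plus shift/mask: the work that
            # dedicated GPU input hardware can absorb for formats it supports.
            pixels.append((buf[g + i] << 2) | ((low_bits >> (2 * i)) & 0x3))
    return pixels
```

For example, `unpack_raw10(bytes([0xFF, 0x00, 0x80, 0x01, 0xE4]))` yields `[1020, 1, 514, 7]`. Every pixel costs several integer operations before any actual image processing starts, which is the overhead the paragraph above says GPUs hide.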

From the mathematical point of view, computer vision using only a GPU does not make use of all the available mathematical tools. Of course the GPU must collect data at a blazing-fast rate, but further processing is computation-intensive with little data exchange. For example, once you have recognized human limbs and represented them as a stick figure, actually recognizing social situations, aggression and the like is definitely not a task for a GPU. Similarly, a cell phone might want to predict the user's wishes instead of merely pattern-matching images. If the cell phone is to help people predict physics (like giving hints to pool players), a GPU is not much help there. And as Andreas keeps saying, a CPU is not the answer either, since there is a limit to how fast it can go without excessive cooling. So parallel coprocessors do have a future; the question is just whether Adapteva will provide them, or rather whether their coprocessor will actually receive help from the open-source community. If there are no programs that can reliably perform gesture recognition, phones and TV sets will never be controlled that way. Someone told me: while it was new, the multitouch display actually had some coolness factor, but now that it's old, there simply haven't been any improvements to that technology! I've seen many people switch from phones with big displays to phones with actual keys as they grew older…

As for the first point, that problems solved by Epiphany could also be solved by GPGPU: sadly this is true, but not because of a structural problem in Epiphany. It is because there are no programmers who can make use of Epiphany cores without resorting to uploading a single program to all cores and letting it perform the same task on different data sets. A famous example here is GCC: should you compile multiple source files at the same time, or should you rather use the available cores to compile a single source file not just faster but also much more thoroughly optimized?

Same with ray tracing: wouldn't it be better to keep the unchanging data and render multiple frames of a movie more centrally, instead of splitting the task across multiple instances of the ray tracer that do the same things over and over again? IMHO the solution is to dedicate some threads to organizing how the other threads work in parallel. That won't bring much efficiency if you only have 4 threads in total, but Parallella's 18 cores or AMD's 36 cores definitely need some management…
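The “some threads organize the others” idea is essentially a coordinator/worker pattern. Below is a minimal sketch, assuming the calling thread plays the coordinator and a shared queue hands out heterogeneous tasks (MIMD-style, rather than one program on every core). `run_with_coordinator` is a hypothetical name, and Python threads are used only to show the structure, not to claim any speedup.

```python
import queue
import threading
from typing import Any, Callable, Dict, List, Tuple

Task = Tuple[str, Callable[[], Any]]


def run_with_coordinator(tasks: List[Task], n_workers: int = 3) -> Dict[str, Any]:
    todo: queue.Queue = queue.Queue()
    done: queue.Queue = queue.Queue()

    def worker() -> None:
        while True:
            item = todo.get()
            if item is None:          # sentinel: the coordinator has no more work
                return
            name, fn = item
            done.put((name, fn()))    # each task may run entirely different code

    workers = [threading.Thread(target=worker) for _ in range(n_workers)]
    for w in workers:
        w.start()

    # Coordinator role (played here by the calling thread): hand out the work,
    # then one stop sentinel per worker, then wait for every worker to finish.
    for task in tasks:
        todo.put(task)
    for _ in workers:
        todo.put(None)
    for w in workers:
        w.join()

    results: Dict[str, Any] = {}
    while not done.empty():
        name, value = done.get()
        results[name] = value
    return results
```

Usage: `run_with_coordinator([("sum", lambda: sum(range(10))), ("upper", lambda: "ray".upper())])` returns `{"sum": 45, "upper": "RAY"}`. Because all tasks are queued before the sentinels, every task is consumed before any worker stops.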

Have you actually worked on any of: a computer vision stack, a compiler, or a raytracer? Because I’ve worked on all three, and most of what you’re claiming is bunk. What you’re writing sounds like someone who has a naive understanding of parallel programming without any hands-on knowledge of the practice.

Traditional vision stacks are very heavy on GPU-friendly image processing at the front of the pipe; once you’re past that, the later stages (stick-figures, as you say), typically aren’t performance intensive, so the loss of GPU acceleration doesn’t matter. More modern vision stacks tend to be built on deep learning architectures, particularly Convolutional Neural Networks, which are again very GPU friendly.
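For a sense of why the front of a vision pipeline maps so well onto GPUs, here is a minimal 2D convolution sketch (strictly a cross-correlation, as in CNN layers: no kernel flip, and only “valid” output positions). Each output pixel reads a small input window and is independent of every other output, which is the shape of work a GPU spreads across many threads. Plain Python lists are used for clarity, not speed; `conv2d_valid` is a hypothetical name.

```python
from typing import List

Image = List[List[float]]


def conv2d_valid(img: Image, kernel: Image) -> Image:
    kh, kw = len(kernel), len(kernel[0])
    oh, ow = len(img) - kh + 1, len(img[0]) - kw + 1
    # Every output pixel depends only on its own kh x kw input window, so each
    # (y, x) below could be computed by a separate GPU thread with no syncing.
    return [
        [
            sum(img[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for x in range(ow)
        ]
        for y in range(oh)
    ]
```

For example, a 2×2 box kernel over `[[1, 2, 3], [4, 5, 6], [7, 8, 9]]` gives `[[12, 16], [24, 28]]`.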

Compilers are almost always parallelized at the source file level because it requires dramatically less synchronization overhead (just a single barrier at the end), and because the available parallelism is typically much higher. There are lots of projects with hundreds of source files, but very few source files with more than a handful of functions.
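A minimal sketch of file-level build parallelism with a single barrier, in the spirit of `make -j`. `compile_one` and `link` are hypothetical stand-ins for invoking the compiler on one translation unit and for the final link; nothing here calls a real toolchain.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def compile_one(path: str) -> str:
    """Hypothetical stand-in: 'compile' one source file into an object file name."""
    return path.rsplit(".", 1)[0] + ".o"


def build(sources: List[str], jobs: int = 4) -> List[str]:
    with ThreadPoolExecutor(max_workers=jobs) as pool:
        # Files compile independently: no communication between tasks at all.
        objects = list(pool.map(compile_one, sources))
    # Collecting every result (and leaving the `with` block) waits for all
    # tasks: this is the single barrier before the serial link step.
    return objects


def link(objects: List[str], output: str = "app") -> str:
    """Hypothetical serial link step: it needs every object file, so it runs after the barrier."""
    return f"{output} <- {' '.join(objects)}"
```

Usage: `link(build(["a.c", "b.c"]))` returns `"app <- a.o b.o"`. The available parallelism is the number of source files, and the only synchronization is waiting for the pool before linking.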

Raytracing is the most plausible of the ones you've given to be good on Epiphany (though it would still be hurt by the lack of texture samplers and accelerated frame buffers). GPUs typically struggle here because they are essentially vector machines and don't cope well with rays diverging on bounce. Epiphany might do better at ray intersection (but not shading) than a typical GPU by virtue of being truly MIMD, but I suspect it would run into its own problems in the memory bandwidth department. Ray intersection is essentially a tree traversal (typically over a bounding volume hierarchy), and the trees are typically huge, i.e. not possible to replicate into the scratchpad of each core, and the nature of the algorithm prevents easy sharding of the tree. Maybe there's a way to pipeline the traversal across multiple cores that would allow sharding to work, but you're firmly into doctoral-thesis-level research there.
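A minimal sketch of stack-based bounding-volume-hierarchy traversal, assuming axis-aligned boxes and a tiny hand-built scene (the `Node` layout, `hits_box` and `traverse` are illustrative, not taken from any renderer). It shows the data-dependent part of the argument: which nodes a ray visits, and how many, depends on the ray itself, so rays processed in lockstep diverge, and any core tracing arbitrary rays may need any part of the tree.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec                                   # min corner of the axis-aligned box
    hi: Vec                                   # max corner
    children: List["Node"] = field(default_factory=list)
    prim_id: Optional[int] = None             # set only on leaves


def hits_box(orig: Vec, d: Vec, lo: Vec, hi: Vec) -> bool:
    """Slab test: does the ray orig + t*d (t >= 0) pass through the box?"""
    tmin, tmax = 0.0, float("inf")
    for a in range(3):
        if d[a] == 0.0:
            # Ray parallel to this slab: it hits only if the origin lies inside it.
            if not lo[a] <= orig[a] <= hi[a]:
                return False
            continue
        t1 = (lo[a] - orig[a]) / d[a]
        t2 = (hi[a] - orig[a]) / d[a]
        tmin = max(tmin, min(t1, t2))
        tmax = min(tmax, max(t1, t2))
    return tmin <= tmax


def traverse(root: Node, orig: Vec, d: Vec) -> Tuple[List[int], int]:
    """Return (sorted ids of leaf boxes hit, number of nodes visited)."""
    hits: List[int] = []
    visited = 0
    stack = [root]
    while stack:
        node = stack.pop()
        visited += 1
        if not hits_box(orig, d, node.lo, node.hi):
            continue                          # prune the whole subtree
        if node.prim_id is not None:
            hits.append(node.prim_id)
        else:
            stack.extend(node.children)
    return sorted(hits), visited


# Tiny hypothetical scene: two unit boxes far apart along x, under one root box.
scene = Node((0, 0, 0), (5, 1, 1), children=[
    Node((0, 0, 0), (1, 1, 1), prim_id=0),
    Node((4, 0, 0), (5, 1, 1), prim_id=1),
])
```

A ray along +x, `traverse(scene, (-1, 0.5, 0.5), (1, 0, 0))`, returns `([0, 1], 3)`, while a ray starting above the scene, `traverse(scene, (0.5, 5, 0.5), (0, 1, 0))`, is pruned at the root and returns `([], 1)`: same code, very different work per ray.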

I'm aware of what you said here; I didn't know others were not. Thanks for setting that straight. So you're basically saying that using MIMD instead of a GPU's SIMD puts you into doctoral-thesis-level research? Well, to me this isn't surprising, and it's exactly what I tried to express. Again, thanks for distilling the major argument for why programmers with a university degree should actually buy that board. Be it processing stick figures, parallelism in compiling source code, or calculating surface images from 3D textures encoded in complicated algorithms (as the program POV-Ray does in a very simplistic form), none of these ideas has been researched much, because many-core MIMD didn't exist until recently. What has been well researched is algorithms for GPUs and SIMD programming, so it's no surprise that in all these areas those are the algorithms that get used.

I indeed have no experience programming any of these things, but I know the maths behind them, and I know there are a lot of possibilities in maths that haven't been researched yet in that respect. Since there is no such research, Adapteva will likely die, and some other company will copy their idea and reap the success as soon as the research has been done. I really hope this big waste of time won't happen…

As someone who's not very familiar with this space, it seems like XMOS would be a close competitor, no? Aren't they also in the distributed/parallel computing chip business?

The talk about I/O got me wondering whether an FPGA is the only solution for fast I/O. For example, I have an application where I would love to drive a pattern from memory at 200 MHz, with resolution fine enough that any output can be shifted by 0.5 ns.
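To put that requirement in numbers, here is a small worked calculation, assuming the pattern is a plain per-pin bit stream and that “shifted by 0.5 ns” means 0.5 ns edge-placement resolution. Integer picoseconds are used to avoid floating-point rounding; `pattern_timing` is a hypothetical helper.

```python
PS_PER_S = 10**12


def pattern_timing(clock_hz: int, step_ps: int) -> dict:
    period_ps = PS_PER_S // clock_hz           # 200 MHz -> 5000 ps per cycle
    steps_per_cycle = period_ps // step_ps     # 5000 ps / 500 ps -> 10 sub-steps
    return {
        "period_ps": period_ps,
        "steps_per_cycle": steps_per_cycle,
        # If each 0.5 ns step can carry a new output level, every pin behaves
        # like a serial stream at this equivalent bit rate.
        "equiv_bit_rate_hz": clock_hz * steps_per_cycle,
    }
```

`pattern_timing(200_000_000, 500)` gives a 5000 ps period, 10 sub-steps per cycle and a 2 Gbit/s-equivalent stream per pin. Rates like that are usually the territory of dedicated serializer hardware rather than software toggling pins.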

I do like the Parallella eval board (ARM, FPGA, and Epiphany chip). Seems like a nice platform to learn parallel computing!

XMOS xCORE is a fake multicore: internally it's one or two very fast RISC CPUs (1 IPC at 500 MHz = 500/1000 MIPS chips) divided into virtual threads ( https://en.wikipedia.org/wiki/Barrel_processor ). Fabulous when you need fast I/O (for example, it can bitbang a 100 Mbit Ethernet MII/RMII PHY) but don't want to learn FPGA programming, with tons of dedicated hard blocks: serializers/deserializers/timers/counters, you name it. Audio people love them (for bitbanging various serial audio interfaces).
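A toy model of the barrel-processor idea described above: one pipeline issues one instruction per cycle, rotating strictly round-robin over hardware threads, so each thread sees only a slice of the core's throughput. This is a simplified sketch, not a model of the actual xCORE pipeline; both function names are hypothetical.

```python
from typing import List


def barrel_issue_order(thread_lengths: List[int]) -> List[int]:
    """Thread id issued on each cycle by a strict round-robin (barrel) scheduler.

    thread_lengths[i] is how many instructions hypothetical hardware thread i
    still has to run; finished threads simply drop out of the rotation.
    """
    remaining = list(thread_lengths)
    order: List[int] = []
    tid = 0
    while any(remaining):
        if remaining[tid] > 0:
            order.append(tid)              # one instruction issued this cycle
            remaining[tid] -= 1
        tid = (tid + 1) % len(remaining)
    return order


def per_thread_mips(core_mips: float, n_threads: int) -> float:
    """With one instruction per cycle shared round-robin, each thread gets an equal slice."""
    return core_mips / n_threads
```

For instance, `barrel_issue_order([2, 1, 2])` gives `[0, 1, 2, 0, 2]`, and assuming 8 active threads on the 500 MIPS core from the comment, `per_thread_mips(500, 8)` is 62.5 MIPS per thread.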

Parallax Propeller is a closer comparison; it's a true multiprocessor chip. I never played with one due to European prices and availability, plus version 2 has been “almost ready” for a couple of years now 🙂

Epiphany's I/O requirements pretty much kill any usage scenarios you might have: you will always need a hot, power-hungry, expensive FPGA next to one of those (no memory controller, can't work standalone). At that point, why not just plop down a cheap ARM SoC with a strong programmable GPU?

There’s a nice reddit post that talks about chip building:

http://np.reddit.com/r/AskEngineers/comments/3adekw/how_are_plans_of_huge_asics_stored_you_dont/csbnuuw